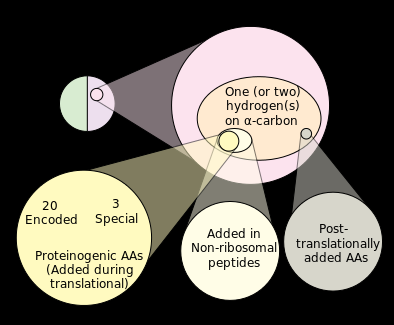

Despite its breathtaking diversity at the morphological level, life on Earth displays a remarkable unity at the biochemical level. With few exceptions, all lifeforms employ DNA as their hereditary material, proteins constructed from the same 20 types of amino acids as their building blocks, and RNA to bridge the two worlds through the genetic code.

Just as Morse code describes the relationship between dots and dashes and the Latin alphabet, the genetic code describes the relationship between the codons of DNA (as read out in messenger RNA) and the amino acids of proteins. Like French, the language of the cell is easy: The codon “GUU” specifies valine, and it’s like that all the way through. If in doubt, consult the figure below.
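The codon-to-amino-acid lookup described above can be sketched in a few lines of Python. This is only an illustration: the names `CODON_TABLE` and `translate` are made up for this example, amino acids are written with their one-letter abbreviations, and “*” stands for a “stop” codon.

```python
from itertools import product

BASES = "UCAG"
# One-letter amino acid codes for the 64 codons of the standard code,
# ordered UUU, UUC, UUA, UUG, UCU, ... GGG ("*" marks a stop codon).
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}

def translate(mrna):
    """Read an mRNA string three letters at a time until a stop codon."""
    protein = []
    for i in range(0, len(mrna) - 2, 3):
        amino_acid = CODON_TABLE[mrna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)

print(CODON_TABLE["GUU"])         # V (valine)
print(translate("AUGGUUCUUUAA"))  # MVL (AUG GUU CUU, then the stop codon UAA)
```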

The genetic code is almost universal; a number of variants have been found, all of which are derived from the standard genetic code (Osawa et al., 1992; Knight, Freeland, & Landweber, 2001). In other words, there are no precursors to the genetic code.

The genetic code as evidence for common descent?

The near-universality of the genetic code has been cited as evidence for universal common ancestry (Crick, 1968; Hinegardner & Engelberg, 1963). According to geneticist Theodosius Dobzhansky, these biochemical universals are “the most impressive” evidence for the interrelationship of all life (1973, p. 128).

And indeed, from the non-teleological perspective this makes sense: If undirected abiogenesis had occurred several times, it would be an amazing coincidence if in every case the resulting organisms had struck upon the same genetic code. Therefore, universal common ancestry is the best explanation.

This changes the moment we throw teleology into the mix. Rather than having to choose between common descent and convergence, the investigator must now also consider the possibility of common design.

The genetic code as example of common design

Suppose that the first life on Earth consisted of a diverse population of engineered cells. Why would engineers employ the same genetic code instead of giving each cell its own code?

Before answering this question, let us ask a counter-question: Why not? What would be the point, from an engineering perspective, of reinventing the wheel? Making multiple codes is extra work and increases the risk of mistakes when genes have to be designed in different languages.

Not only is there no reason for engineers to adopt multiple codes, there is good reason to use the same code. If different cell types used different codes, they would be unable to tap into the power of horizontal gene transfer (HGT).

HGT plays an essential role in bacterial evolution, where genetic models indicate that substantial HGT is required for the survival of bacterial populations (Takeuchi, Kaneko, & Koonin, 2014). Though less common in eukaryotes, HGT is not restricted to bacteria. For example, a study found that ferns adapted to shade by horizontal transfer of a gene from the moss-like hornworts from which they diverged 400 million years ago (Li et al., 2014). HGT may even have played a role in the evolution of humans, with seaweed-digesting genes from ocean bacteria having found their way into the gut microbes of Japanese individuals (Hehemann et al., 2010).

In other words, categorizing the standard genetic code as an example of common design is not an ad hoc rationalization; rather, there is a good engineering reason for reusing the code.

A code facilitated by molecular machines

Microbiologist Franklin M. Harold describes the genetic code as “one symbolic language translated into another, with the aid of a universal apparatus of quite phenomenal sophistication” (2014, p. 222).

And indeed, the molecular machinery for translating DNA into proteins is quite impressive. In bacteria, the process requires RNA polymerase to unwind the DNA double helix and transcribe its sequence into messenger RNA; sigma factor to regulate the activity of RNA polymerase; at least 20 different types of transfer RNA, at least one for each amino acid; and the ribosome, the protein synthesis factory of the cell, where the messenger RNA and the matching transfer RNAs are lined up and the amino acids are linked together, assembly-line style.

In eukaryotes, the process is even more complicated.

However, a purely physical description of the complexity involved in protein synthesis ignores the conceptual exceptionalism of the genetic code, as pointed out by Harold: One symbolic language translated into another.

This makes the genetic code a prime candidate for design. As Mike Gene points out, “experience has shown us that codes typically are the products of mind, and non-teleological forces do not generate codes. In fact, if the genetic code is taken off the table, there is no evidence that a conventional code employing a linear array of symbols has ever been spawned by a non-teleological force.” (2007, p. 281)

An exceptionally good code

Is there a reason why, say, “GUU” should specify valine? Or is the standard genetic code little more than a “frozen accident”?

Even a casual look at the genetic code indicates that there is a method in the madness. A single amino acid is often specified by similar codons, as in the case of leucine, which is specified by CUU, CUC, CUA, and CUG, among others. Thus, a substitution mutation in the last letter of any of these four codons will have no effect on which amino acid is specified.

This logic extends to a deeper level. For example, a mutation in one of the other letters may result in phenylalanine (UUU, UUC), an amino acid with chemical properties similar to those of leucine.
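The claim that last-letter substitutions are often harmless can be checked by simple counting. The sketch below is an illustration only, and the function name `synonymous_counts` is invented for this example. For each of the 61 amino-acid-specifying codons of the standard code, it tries every single-letter substitution at a given position and counts how many leave the amino acid unchanged.

```python
from itertools import product

BASES = "UCAG"
# Standard genetic code, codons ordered UUU, UUC, UUA, UUG, UCU, ... GGG.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}

def synonymous_counts(position):
    """Return (unchanged, total) over all substitutions at one codon position.

    Only codons that specify an amino acid are mutated; a mutation that
    creates a stop codon counts as a change.
    """
    unchanged = total = 0
    for codon, amino_acid in CODON_TABLE.items():
        if amino_acid == "*":
            continue
        for base in BASES:
            if base == codon[position]:
                continue
            mutant = codon[:position] + base + codon[position + 1:]
            total += 1
            unchanged += CODON_TABLE[mutant] == amino_acid
    return unchanged, total

for position in range(3):
    print(position + 1, synonymous_counts(position))
# 1 (8, 183)
# 2 (0, 183)
# 3 (126, 183)
```

In other words, roughly two thirds of all substitutions in the last letter leave the amino acid unchanged, compared with a handful in the first letter and none in the second.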

In other words, the standard genetic code seems to be constructed in such a way as to make the organism robust to the effects of mutations. But is there a way to quantify the level of this optimization and compare the standard code to other possible codes?

In 2000, a team of scientists led by Stephen J. Freeland of Princeton University published such an analysis. They concluded that with respect to substitution mutations, the standard genetic code “appears at or very close to a global optimum for error minimization: the best of all possible codes” (Freeland et al., 2000, p. 515).
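To give a feel for what such a comparison involves, here is a minimal sketch of scoring the standard code against randomly shuffled codes. It is not the method or the data of Freeland et al.: as a stand-in measure of chemical similarity it uses the Kyte-Doolittle hydropathy scale, it weights all substitutions equally, and the names `cost`, `random_code`, and `fraction_better` are invented for this example. Alternative codes are generated by shuffling the 20 amino acids among the codon blocks of the standard code while keeping the “stop” codons fixed.

```python
import random
from itertools import product

BASES = "UCAG"
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
STANDARD = {"".join(c): aa for c, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)}

# Kyte-Doolittle hydropathy values (an assumed stand-in for chemical similarity).
HYDROPATHY = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5, "Q": -3.5, "E": -3.5,
    "G": -0.4, "H": -3.2, "I": 4.5, "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8,
    "P": -1.6, "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}

def cost(code):
    """Mean squared hydropathy change over all single-letter substitutions
    that turn one amino-acid codon into another (stop codons are skipped)."""
    changes = []
    for codon, amino_acid in code.items():
        if amino_acid == "*":
            continue
        for position, base in product(range(3), BASES):
            if base == codon[position]:
                continue
            mutant_aa = code[codon[:position] + base + codon[position + 1:]]
            if mutant_aa != "*":
                changes.append((HYDROPATHY[amino_acid] - HYDROPATHY[mutant_aa]) ** 2)
    return sum(changes) / len(changes)

def random_code(rng):
    """Shuffle the 20 amino acids among the standard code's codon blocks."""
    originals = sorted(HYDROPATHY)
    shuffled = originals[:]
    rng.shuffle(shuffled)
    swap = dict(zip(originals, shuffled), **{"*": "*"})
    return {codon: swap[aa] for codon, aa in STANDARD.items()}

def fraction_better(n_samples=1000, seed=0):
    """Fraction of sampled codes with a lower cost than the standard code."""
    rng = random.Random(seed)
    standard_cost = cost(STANDARD)
    better = sum(cost(random_code(rng)) < standard_cost for _ in range(n_samples))
    return better / n_samples

print(fraction_better())
```

The smaller the printed fraction, the fewer of the sampled codes outperform the standard code on this particular measure. The result depends on the similarity scale and the weighting chosen, so the sketch illustrates the logic of the comparison rather than reproducing any published figure.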

Substitution mutations are not the only type of mutation, though. In a frameshift mutation, a letter is added or deleted, disrupting the reading frame downstream of the mutation site. The result is a random string of amino acids that can gunk up the cell. Especially harmful are frameshift mutations that eliminate the “stop” codon, resulting in a string of random gunk that can be quite long.

The standard genetic code has as many as three “stop” codons, which seems excessive, considering that there is only one “start” codon. But having three “stop” codons instead of one increases the chances that a new “stop” codon will be encountered downstream in the case of a frameshift mutation.

The standard genetic code is made even more robust to frameshift mutations by having the sequence of the “stop” codons overlap with those of the codons specifying the most abundant amino acids. This feature, as Itzkovitz and Alon conclude, makes the standard genetic code “nearly optimal” at minimizing the harmful effects of frameshift mutations:

“We tested all alternative codes for the mean probability of encountering a stop in a frame-shifted protein-coding message. We find that the real genetic code encounters a stop more rapidly on average than 99.3% of the alternative codes.” (Itzkovitz & Alon, 2007, p. 409)
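The quantity at stake, how soon a shifted reading frame runs into a “stop” codon, is easy to illustrate. The sketch below is an illustration only: the function name `codons_before_stop` and the short example message are invented, and reading from offset 1 or 2 is used as a simple stand-in for the effect of a frameshift mutation.

```python
STOP_CODONS = {"UAA", "UAG", "UGA"}

def codons_before_stop(mrna, offset):
    """Count the codons read from `offset` before the first stop codon.

    Returns None if no stop codon occurs in that reading frame.
    """
    for count, i in enumerate(range(offset, len(mrna) - 2, 3)):
        if mrna[i:i + 3] in STOP_CODONS:
            return count
    return None

message = "AUGGCUGAUAAGUGGUAA"  # hypothetical example sequence

print(codons_before_stop(message, 0))  # 5    (AUG GCU GAU AAG UGG, then UAA)
print(codons_before_stop(message, 1))  # None (UGG CUG AUA AGU GGU, no stop)
print(codons_before_stop(message, 2))  # 1    (GGC, then UGA)
```

A code that is robust to frameshifts in this sense is one where shifted frames tend to behave like the last case, hitting a stop early, rather than like the middle case.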

An interesting perspective is provided by a recent study by Geyer and Mamlouk (2018). Comparing the standard genetic code with one million random codes, they found that when measuring for robustness against the effects of either point mutations or frameshift mutations, the standard genetic code is “competitively robust” but “better candidates can be found”. However, “it becomes significantly more difficult to find candidates that optimize all of these features - just like the SGC [standard genetic code] does.” The authors conclude that when considering the robustness against the effects of both point mutations and frameshift mutations, the standard genetic code is “much more than just ‘one in a million’.”

The genetic code is likely a compromise that balances robustness against several types of mutations. If the standard genetic code is the product of a teleological process, I expect that future analyses which incorporate and compare different types of robustness - like Geyer and Mamlouk (2018) - will further support the optimality of the standard code.

In the meantime, we can conclude that the standard genetic code is exceptionally good - one in a million and possibly better.

Did the genetic code evolve?

As we have just seen, the genetic code displays a remarkable level of optimization when it comes to protecting the organism from the effects of mutations. This fact is hard to reconcile with a view of the genetic code as nothing but a frozen accident. If the genetic code was not engineered, it must have been optimized by natural selection, going through countless codes before happening on the one employed by life today.

But we find no evidence of this long trek through the fitness landscape. All life today employs the same code, and the few variants that exist are all derived from the standard code, not precursors to it.

It is possible that the lineage in which the standard genetic code arose drove all those other lineages with precursor codes extinct. But that is hard to square with the fact that variants of the code exist today, with no evidence of being driven to extinction by their superior-coded competitors. Changing an organism’s genetic code may be hard (as evidenced by the limited number of variants), but once changed, the variant does not seem to significantly decrease the organism’s fitness. At least not to the extent where one genetic code drives all competitors around the globe to extinction.

Other explanations can no doubt be formulated. But explaining the absence of evidence only establishes the possibility of the code being the product of an evolutionary process (a possibility I already accept). Such explanations do not establish that such a process actually took place.

Conclusion and perspectives

The genetic code is a prime candidate for design. It is a symbolic language, translated into another by molecular machines. The universality of the code can easily be explained as a case of common design, as there is a good engineering reason for reusing the code. Furthermore, the code appears to be exceptionally good at protecting organisms from the effects of mutations - one in a million or better.

The evidence is thus consistent with a scenario in which the first life on Earth consisted of a diverse population of engineered cells, all of which used the standard genetic code. The variants of the code which we observe today are secondarily derived from this original code.

This scenario generates predictions, potentials for falsification, and avenues for further research.

For example, Freeland et al. (2000) conclude that the standard genetic code can only be considered “the best of all possible codes” if the set under consideration is limited to those codes in which amino acids from the same biosynthetic pathway are assigned to codons sharing the first base. The researchers give a historical explanation for this restriction: the current code expanded from a primordial code. If this pattern persists (i.e., it is not an artifact of the researchers only looking at robustness against substitution mutations), the teleological scenario would expect there to be good engineering reasons to group amino acids from the same biosynthetic pathway together like this. On the other hand, if more sophisticated models underscore the need for historical explanations and/or show the standard genetic code to be mediocre, the teleological scenario will be in trouble.

The teleological scenario also predicts that all organisms have the standard genetic code or derivatives thereof. Scientists estimate that Earth has about one trillion microbial species, with 98 percent yet to be discovered. If, as we start finding and studying those, we find multiple variants of the standard genetic code that can reasonably be considered as precursors, the teleological scenario will once again be in trouble.

Thus, we see that teleological explanations, rather than being vacuous “the designer did it” proclamations, can generate testable insights about nature.

References

Crick F.H.C., 1968, “The Origin of the Genetic Code”, Journal of Molecular Biology 38(3):367-379

Dobzhansky T., 1973, “Nothing in Biology Makes Sense except in the Light of Evolution”, The American Biology Teacher 35(3):125-129

Freeland S.J., Knight R.D., Landweber L.F., & Hurst L.D., 2000, “Early Fixation of an Optimal Genetic Code”, Molecular Biology and Evolution 17(4):511-518

Gene M., 2007, The Design Matrix: A Consilience of Clues, Arbor Vitae Press

Geyer R. & Mamlouk A.M., 2018, “On the Efficiency of the Genetic Code after Frameshift Mutations”, PeerJ 6:e4825

Harold F.M., 2014, In Search of Cell History: The Evolution of Life’s Building Blocks, University of Chicago Press

Hehemann J.-H., Correc G., Barbeyron T., Helbert W., Czjzek M., & Michel G., 2010, “Transfer of Carbohydrate-Active Enzymes from Marine Bacteria to Japanese Gut Microbiota”, Nature 464(8937):908-912

Hinegardner R.T. & Engelberg J., 1963, “Rationale for a Universal Genetic Code”, Science 142(3595):1083-1085

Itzkovitz S. & Alon U., 2007, “The Genetic Code is Nearly Optimal for Allowing Additional Information within Protein-Coding Sequences”, Genome Research 17(4):405-412

Knight R.D., Freeland S.J., & Landweber L.F., 2001, “Rewiring the Keyboard: Evolvability of the Genetic Code”, Nature Reviews Genetics 2(1):49-58

Li F., Villarreal J.C., Kelly S., Rothfels C.J., Melkonian M., Frangedakis E., Ruhsam M., Sigel E.M., Der J.P., Pittermann J., Burge D.O., Pokorny L., Larsson A., Chen T., Weststrand S., Thomas P., Carpenter E., Zhang Y., Tian Z., Chen L., Yan Z., Ying Z., Sun X., Wang J., Stevenson D.W., Crandall-Stotler B.J., Shaw A.J., Deyholos M.K., Soltis D.E., Graham S.W., Windham M.D., Langdale J.A., Wong G.K.-S., Mathews S., & Pryer K.M., 2014, “Horizontal Transfer of an Adaptive Chimeric Photoreceptor from Bryophytes to Ferns”, Proceedings of the National Academy of Sciences 111(18):6672-6677

Osawa S., Jukes T.H., Watanabe K., & Muto A., 1992, “Recent Evidence for Evolution of the Genetic Code”, Microbiological Reviews 56(1):229-264

Takeuchi N., Kaneko K., & Koonin E.V., 2014, “Horizontal Gene Transfer Can Rescue Prokaryotes from Muller’s Ratchet: Benefit of DNA from Dead Cells and Population Subdivision”, G3 (Bethesda) 4(2):325-339