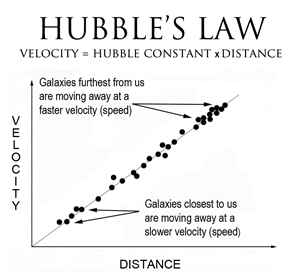

If you consider objects at increasing distances from the Earth, then to a decent first approximation they follow Hubble’s law:

$$v = H_0 d$$

Where v is the recessional velocity, H_0 is the Hubble constant and d is the distance of the astronomical object. The Hubble constant has a value somewhere around 70 (km/s)/Mpc. You will note that this has units of distance/time divided by distance (or inverse time). It is a slightly odd way to write the constant, but it can be quickly used to calculate how fast an object at a certain distance is receding from the Earth due to the Universe’s expansion.

Side note: to calculate this more precisely, you should use the Friedmann equation, which describes how the Hubble expansion changes as a function of the density of stuff inside the universe, but that is beyond the scope of my post here.

Back to our estimate: we want to know the distance at which

$$H_0 d > c$$

where c is the speed of light. Doing a little aligning of units gets you d > 4.3 Gpc, which is 4.3 gigaparsecs or about 14 billion light years, the figure you found in the article posted above. Put another way, an object that is presently 14 billion light years away or more is presently receding from us faster than the speed of light (assuming a constant Hubble expansion rate). This cutoff point wouldn’t necessarily change for most of the universe’s history. However, with a now accelerating expansion (unless dark energy is more interesting than a cosmological constant), this number will gradually start decreasing. In principle, the most distant galaxies that we can presently observe (now further thanks to JWST) will gradually become more and more redshifted until they disappear from our sight entirely.
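For anyone who wants to check the unit juggling, here is a minimal Python sketch of the estimate above. It assumes the round value of 70 (km/s)/Mpc used in this post and a conversion of roughly 3.2616 light years per parsec; the function names are just ones I made up for illustration.

```python
# Hubble distance d_H = c / H_0: the distance at which H_0 * d equals c.
# Assumes a constant H_0, as in the estimate in the post.

C_KM_S = 299_792.458   # speed of light in km/s
H0 = 70.0              # Hubble constant in (km/s)/Mpc (round value from the post)
LY_PER_PC = 3.2616     # light years per parsec (approximate)


def recession_velocity_km_s(d_mpc, h0=H0):
    """Hubble's law v = H_0 * d, with d in Mpc and v in km/s."""
    return h0 * d_mpc


def hubble_distance_mpc(h0=H0):
    """Distance in Mpc at which the recession velocity equals c."""
    return C_KM_S / h0


d_mpc = hubble_distance_mpc()
d_gpc = d_mpc / 1_000.0                 # Mpc -> Gpc
d_gly = d_mpc * 1e6 * LY_PER_PC / 1e9   # Mpc -> pc -> ly -> Gly

print(f"Hubble distance: {d_gpc:.2f} Gpc, or {d_gly:.1f} billion light years")
```

With these inputs the result comes out close to 4.3 Gpc and 14 billion light years, matching the numbers quoted above. A different choice of H_0 (values in the high 60s or low 70s are both in common use) shifts the answer by a few percent.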

None of this answers your questions per se about when did cosmic expansion exceed the speed of light, but here is a short video that talks about some of these things:

I suppose your question can also be answered, to a first (very simple) approximation, as follows. Now that we have found the magic distance of about 14 billion light years, we can ask how long it would take an object, with its speed continually increasing as it moves away from us (or from the location where Earth would someday be), to reach that cutoff point. Maybe we could write something like this:

$$\frac{dx(t)}{dt} = H_0 \, x(t)$$

The main idea here is that the velocity is turned into the first time derivative of distance from Earth, and the distance from Earth changes as a function of time.
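If H_0 is treated as a constant, the equation above has the standard exponential solution x(t) = x_0 exp(H_0 t), so the time to grow from a starting separation x_0 to the Hubble distance c/H_0 is ln((c/H_0)/x_0) / H_0. Here is a rough Python sketch of that toy model. The starting separation x_0 is a free input that I am introducing for illustration (the post does not specify one), and the function names are made up. Since the real H changes over time, this is only a sanity check of the simple equation, not a reproduction of the figure read off the plot.

```python
import math

# Toy model: dx/dt = H_0 * x with constant H_0 (an assumption; the real
# expansion rate changes over cosmic history).

C_KM_S = 299_792.458           # speed of light in km/s
H0 = 70.0                      # Hubble constant in (km/s)/Mpc (round value)
KM_PER_MPC = 3.0857e19         # kilometres per megaparsec (approximate)
SECONDS_PER_GYR = 3.15576e16   # seconds in 1e9 Julian years


def hubble_time_gyr(h0=H0):
    """1 / H_0 expressed in billions of years."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def distance_at(t_gyr, x0_mpc, h0=H0):
    """Solution x(t) = x_0 * exp(H_0 * t), with t in Gyr and x in Mpc."""
    return x0_mpc * math.exp(t_gyr / hubble_time_gyr(h0))


def time_to_hubble_distance_gyr(x0_mpc, h0=H0):
    """Time for a separation x_0 (hypothetical input) to grow to c / H_0."""
    if x0_mpc <= 0:
        raise ValueError("starting separation must be positive")
    d_h_mpc = C_KM_S / h0
    return hubble_time_gyr(h0) * math.log(d_h_mpc / x0_mpc)


# Example: an object that starts at half the Hubble distance.
d_h_mpc = C_KM_S / H0
t_half = time_to_hubble_distance_gyr(d_h_mpc / 2)
print(f"Hubble time: {hubble_time_gyr():.2f} Gyr")
print(f"Time from d_H/2 to d_H: {t_half:.2f} Gyr")
```

Note how strongly the answer depends on the chosen x_0: in this toy model the time grows logarithmically as the starting separation shrinks, which is one reason the scale-factor treatment is the usual way to do this properly.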

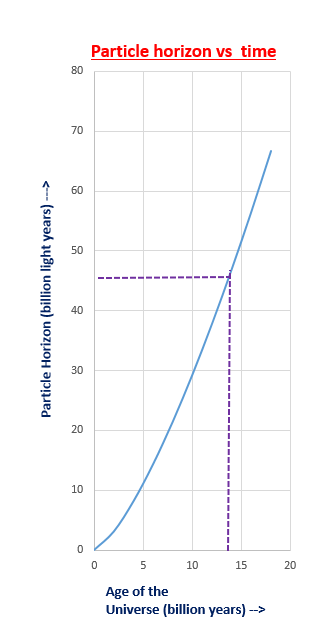

Edit: usually this is done via the scale factor of the universe, but a plot of this distance (also called the Particle Horizon) is shown here:

Source explaining this in more detail:

So @Dale, to answer your question: it looks like the universe first passed the Hubble radius about 8 billion years ago.