Jay313

(Jay Johnson)

I’ve seen anecdotal evidence similar to your experience. Here’s one example I posted 3 days ago in another thread:

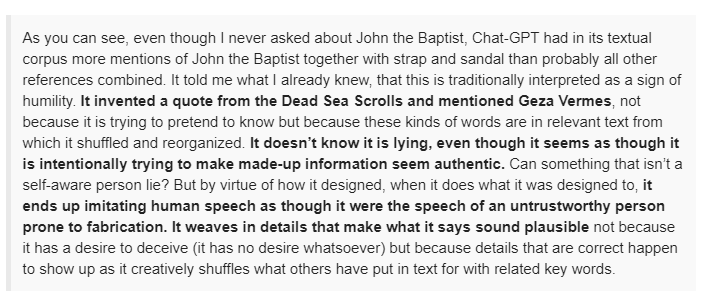

To quote McGrath’s results (emphasis mine):

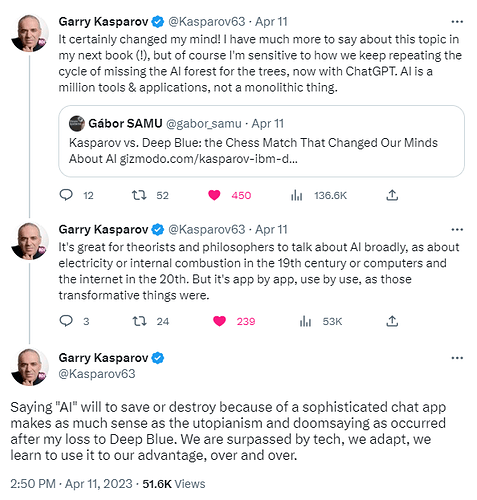

Ultimately, I agree with what Kasparov says here about his 1997 loss to IBM’s Deep Blue chess engine:

App by app, use by use. Chat apps (like ChatGPT) are designed to mimic human communication, not replace it. They’re also not search engines.
